Have you ever wondered what the normal work tasks of a database developer/integration engineer look like? If you have, then this is the post for you. This is a new series of posts where I give an overview of what I accomplished each week, offering insight into what life as a database developer looks like for those who might be curious. I also want to do these reviews for my own records and edification, because it’s always good to keep track of the things you accomplish at your job. This post reviews the week of September 23 – September 27, 2024.

What’s in this post

- Meeting with a Software Vendor

- Updating Security Certificates

- Emergency Database Refresh

- Completing Database Permissions Requests

- Attending A Women’s Leadership Conference

- Troubleshooting a Virtual Machine (VM)/SQL Server Connection Issue

- Finishing and Presenting a Python Data Analysis Script

- Summary

Meeting with a Software Vendor

One part of my job is interacting with the various software vendors we buy products from. I’m currently on a longer-term project where we are doing piece-by-piece upgrades to an application that our legal department uses, so this week I met with the vendor’s development team to get an overview of the next step of this upgrade process.

One of the joys of working with vendors is that sometimes you go into a meeting thinking its purpose is completely different from what it ends up being, and then you have to rethink everything you planned on the fly. That was how this meeting went. I thought the vendor was going to give an overview of the product for my own learning. Instead, I ended up leading the conversation, asking the questions our side needed answered before we could move forward with the next step of the upgrade. As someone newer to the company, this change was a little scary, but I handled it well and got good information for our team from the vendor.

Updating Security Certificates

This part of technology is something I’m still not very familiar with but had to dive into this week. My team had an ETL that broke because it could no longer communicate with our customer’s software due to an outdated security certificate. To fix this broken ETL, I had to locate the correct updated certificate and put that on our ETL server and remove the old one. After making such a small change, things were back to working as they normally do. This problem was a good learning opportunity and not nearly as difficult as I was expecting it would be.
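For anyone else new to certificates, here is a minimal Python sketch of how you could check how many days a server’s certificate has left, so an expiration doesn’t come as a surprise. This is not the process my team used, just an illustration using the standard library; the function names `days_until_expiry` and `fetch_not_after` are my own, and the default port 443 is an assumption.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Return the days left before a certificate's notAfter time.

    `not_after` uses the format returned by getpeercert(), for example
    "Jan  5 09:34:43 2018 GMT". The result is negative if already expired.
    """
    expires_at = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires_at - now) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host:port over TLS and return the certificate's notAfter string.

    Note: with the default context, a certificate that has ALREADY expired
    makes the handshake fail with an ssl.SSLCertVerificationError, so this
    is mainly useful for spotting certificates that are about to expire.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

Calling `days_until_expiry(fetch_not_after("some-host.example.com"))` (a placeholder host name) on a schedule and alerting when the number drops below some threshold would be one way to get ahead of this kind of break.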

Emergency Database Refresh

I got to experience my first emergency refresh request in our Oracle database environment this week. Something went wrong with one of our production databases, and the app development team wanted to refresh that database into the lower environments as quickly as possible to start troubleshooting. I think fate decided we should have a good day, because our Oracle cloning process into our test environments went off without any issues and finished in about an hour, which is almost a record low. We’ve run into numerous issues in recent months with the pluggable database cloning process in Oracle, so we are very thankful that none of those problems arose when we needed this emergency refresh.

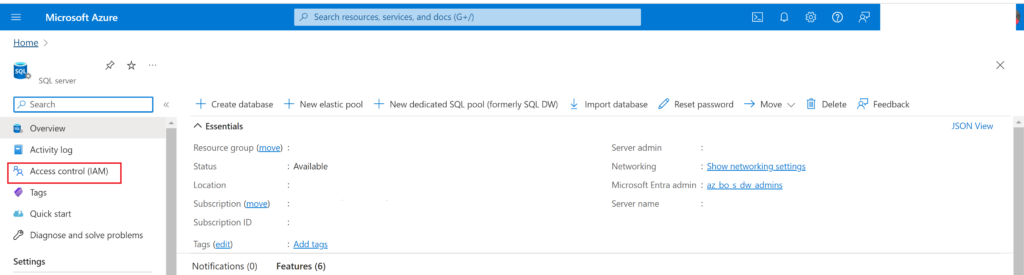

Completing Database Permissions Requests

Another piece of Oracle work I got to experience for the first time this week was updating user permissions. One of our application developers requested that a service account be granted the same level of permissions that his own account had (in test) so that he could complete a task. Although the SQL needed to complete a permissions change in Oracle looks a little different from what I’m used to in SQL Server, it was very similar overall to making permissions changes with T-SQL, so it was easy to complete.
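To give a feel for what “granting the same permissions” can look like, here is a small Python sketch that builds Oracle `GRANT` statements from lists of privileges. It is an illustration, not the exact SQL I ran. It assumes you have already looked up the source account’s roles, system privileges, and object privileges (for example from the `DBA_ROLE_PRIVS`, `DBA_SYS_PRIVS`, and `DBA_TAB_PRIVS` dictionary views), and the helper name `build_grants` and all account, role, and table names are made up.

```python
from typing import Iterable, List, Tuple


def build_grants(
    target: str,
    roles: Iterable[str],
    sys_privs: Iterable[str],
    obj_privs: Iterable[Tuple[str, str, str]],
) -> List[str]:
    """Build GRANT statements that give `target` the listed privileges.

    roles:     role names, e.g. from DBA_ROLE_PRIVS.GRANTED_ROLE
    sys_privs: system privileges, e.g. from DBA_SYS_PRIVS.PRIVILEGE
    obj_privs: (privilege, owner, object_name) tuples, e.g. from DBA_TAB_PRIVS

    Assumes plain identifiers that need no quoting; review the generated
    statements before running them.
    """
    statements = [f"GRANT {role} TO {target}" for role in roles]
    statements += [f"GRANT {priv} TO {target}" for priv in sys_privs]
    statements += [
        f"GRANT {priv} ON {owner}.{obj} TO {target}"
        for priv, owner, obj in obj_privs
    ]
    return statements


# Hypothetical example values, not real accounts or objects.
for statement in build_grants(
    "SVC_APP",
    roles=["APP_READ_ROLE"],
    sys_privs=["CREATE SESSION"],
    obj_privs=[("SELECT", "HR", "EMPLOYEES")],
):
    print(statement + ";")
```

Generating the statements as text first means someone can read through them before anything changes in the database.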

Attending A Women’s Leadership Conference

My favorite part of this week was getting to spend Tuesday and Wednesday at the Women & Leadership Conference by the Andrus Center at Boise State. This was my first time attending this conference, and I was able to go with the handful of other women in the IT department.

As would be expected for this type of conference, the sessions focused on building soft skills in women instead of hard technical skills like previous work conferences I’ve attended. I listened to a range of women speakers, all of whom are in various leadership roles in different fields and states across the country. I wouldn’t say I loved every session I attended, but most of them were interesting and I learned a lot of tips for managing in the work environment as a woman that I hadn’t thought of before. In the near future, I will be writing a recap with more of my learnings from the conference, if you’re interested in hearing more.

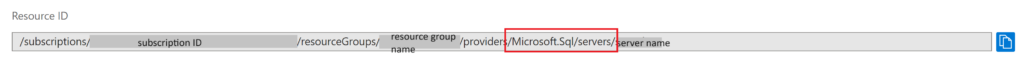

Troubleshooting a Virtual Machine (VM)/SQL Server Connection Issue

This issue was not something I had ever considered before, because I’ve never had to think about the networking setup for the VMs I’ve worked with. Normally, someone else sets up the VM and makes sure it has all the networking and firewall rules needed before we get access to it. However, one of my project teammates and I found out that’s not always the case.

I am working on a different application upgrade project from the one I mentioned above, and I was in charge of setting up the SQL Servers for it last week. This week, the main application developer started his portion of setting up the VMs we put the SQL Servers on, and he found that he was unable to access the SQL Server from SQL Server Management Studio (SSMS) on his local computer. When he messaged me about this connection issue, I had no idea where to start troubleshooting, since I had never had to think about how SSMS connects to the SQL Server on the VM before.

I had to work with our networking team to figure out the issue, which ended up being that the local firewall on the VM was not set up to allow ingress on the two ports that SSMS/SQL Server requires for connections. The networking team wrote two commands to allow that ingress and ran them on all 3 servers we set up last week. After that, we were able to easily connect to the new SQL Server instances from our local SSMS apps instead of having to log in to the VMs directly.
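If you ever hit something similar, a quick first check is whether the port is reachable at all from your machine. Below is a minimal Python sketch of a TCP reachability test. It is not what the networking team ran; the function name `can_connect` is my own, and 1433 is only the default TCP port for a default SQL Server instance, so your environment may use something different.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`.

    A False result means the connection was refused, timed out, or the
    host name could not be resolved. It does not say which of those
    happened, only that the port is not reachable from this machine.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example usage with a placeholder server name:
# can_connect("my-sql-vm.example.com", 1433)
```

A `False` from your laptop combined with a working connection from the VM itself points toward a firewall or network rule rather than a SQL Server configuration problem.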

Finishing and Presenting a Python Data Analysis Script

One of the things I was most pleased and excited about this week was finally getting to demo a complicated Python script I wrote to optimize customer orders based on their previous order history, given a list of input parameters from the customer. I have spent months working on this script, going back and forth with the business users about what should be included, adding new features when requested, and even totally reworking the algorithm when their requests got more advanced. My demo of the script had an audience of the business users as well as members of my own team, including my manager.

The demo itself went really well. The script worked exactly as I wanted it to, running in under 5 seconds to optimize the customer data and provide a recommendation for what should be stocked to fulfill those orders, which is a massive improvement over the current process that takes a week to calculate the best possible solutions. What did not go as I expected was hearing from the business users that they are unsure if the solution provided is accurate enough, so they’re afraid to move forward with my script unless I do several things that would likely lead to several more months of rework. By the end of the meeting, we had decided as a team that instead of implementing the solution I worked hard on for months, we would put their current process onto a newer and beefier server in hopes that it would run faster than the current week.

No developer wants to spend months working on something just to be told that it won’t be used due to reasons that are out of the developer’s control, but that seems to be the situation I am in now. I am staying positive about it though, because it was a great Python development learning opportunity for me when I came into the company, and I have faith that with time, the business users will come around to using the faster and more modern solution when they see that the results I produced are very close to what they already get with the current solution. I might need to do a little more tweaking to get my algorithm’s results into an acceptable range compared to the current process, but I am hoping it won’t require a full rework to do so.

Summary

Sometimes I have weeks at work where I feel like I haven’t accomplished all that much. This week was one of those. But now that I have typed out everything I did like this, I am seeing that I do a LOT of work while I’m at work, and I am proud of everything I learned and accomplished this week, even if every single work item did not go as planned.

Being a database developer or data integration engineer comes with a lot of variation in work, which you can probably see by looking at this week’s and last week’s summaries. There is always something new to learn and work on, so I’m excited I’ve had another interesting week of work and I look forward to next week being interesting as well. (Although I’m technically on vacation next week, so really I mean the following week.)

Do you have any questions about what a database developer does day-to-day that I haven’t answered yet? Let me know in the comments below!