As many of you probably already know, my cloud development career started in AWS, which I worked with for just about 3 years while I worked at Scentsy. Since my recent transition to a new job at a different company, I have started to develop in Azure instead, and it’s been a learning journey. Although both platforms allow for cloud development and processing, they have quite a few notable differences in what is offered and how they offer it, which is what I’m going to cover in this post today. My goal for this list isn’t to have a technical or all-inclusive list of the differences, but more of a difference a developer might feel in their own work if they make the same switch that I have.

What’s in this post:

- Azure seems simpler

- Azure has better cloud ETL development

- More on Azure Data Factory

- Power Automate & Logic Apps

- Copilot isn’t what they want it to be

- Unintuitive service naming

- Summary

Azure seems simpler

Azure is simpler yet still robust. Sometimes I feel like AWS tries to overcomplicate their services in order to make them seem fancier or more cutting-edge. And it also seems like they split what could be one service into multiple just to increase their total service count. Azure combines multiple functions I was used to in AWS into a single service. An example of that is Azure DevOps, which combines your ticketing/user story system with your DevOps pipelines and your Git (or other) repos. In my past job, we used TeamCity and Octopus Deploy for the pipelines, Jira for the ticketing, and Bitbucket to store our code, so I was a little confused my first couple of weeks in my new role since everything seemed to only be in one location. But I now find it nice and easier to work with.

Azure has better cloud ETL development

In the Azure cloud platform, there is a service called Synapse Workspace or Synapse Studio, and a second service called Azure Data Factory, which both allow you to create ETL pipelines right in the cloud. AWS has Glue, but that really doesn’t seem to have the same feel or capabilities that either Synapse or Azure Data Factory (ADF) has in the Azure realm. I have already updated and created several pipelines in each of those services in Azure and I really enjoyed working with them because they were very intuitive to get working with as a newbie and I could do everything I needed for the ETL right in the cloud development workspace.

When I worked with Glue in the past, it definitely did have some limited capabilities for making drag-and-drop ETLs in the cloud, but the service seemed to have a lot of limits which would force you to start writing custom PySpark code to make the data move. While writing custom code is also possible with Synapse and ADF, they both are built with more robust built-in components that allow you to make your ETLs quickly without writing any more custom code than a few SQL queries. I have really been enjoying working in these new services instead of AWS’ Glue.

More on Azure Data Factory

Another reason why I have been enjoying working with Azure Data Factory (ADF) is because it seems to be a modern version of the SSIS I am already familiar with, and located in the cloud instead of on an ETL server and local developer box. Although the look of ADF isn’t exactly the same as SSIS, it still is the drag-and-drop ETL development tool I love working with. And since it’s developed by Microsoft, you get all the best features available in SSIS ETL development without having to work with the old buggy software. I’m sure as I keep working with ADF that I’ll find new frustrating bugs that I’ll need to work around, but my experience with it so far has been only positive.

Power Automate & Logic Apps

Two other tools that aren’t available in the AWS ecosystem and that don’t seem to have an analog in AWS are Power Automate and Logic Apps. While these tools are more aimed at people who are not developers, to allow them to automate some of their daily work, they are interesting and useful features for certain scenarios and I am enjoying learning about them and playing with them. One of the best parts about working with Azure services is that it’s fully integrated into the entire Microsoft ecosystem, so you can pull in other non-Azure Microsoft services to work with Azure and expand your horizons for development. I’m not sure yet that I would 100% recommend working with Power Automate or Logic Apps for task automation (I’m still not done learning it and working with it), but it at least is another option to fall back on in the Microsoft realm that isn’t available in AWS.

Copilot isn’t what they want it to be

While most of my experience with Azure so far is positive, there are a couple annoying things I’ve noticed that I think are worth sharing, although neither of them are so egregious that it would prevent me from recommending working with this platform.

The biggest negative about Azure for me so far is that Microsoft keeps trying to shove Copilot (their AI assistance tool which seems only slightly more advanced than Clippy) into every single product they offer even when it provides no benefit or actually detracts from your total productivity. The perfect example of this is the “New Designer” for Power Automate. For some unknown reason, Microsoft has decided that instead of allowing you to do a drag-and-drop interface for task components to build your automation flow, everyone should instead be required to interact with Copilot and have it build your components instead. That might be useful if you had already been working with Power Automate in the past so knew what capabilities and components it offered. But as someone totally new to this space who is trying to learn how to use the tool and has no idea what is currently possible to develop, it feels basically impossible to communicate with the AI in any meaningful way in order to build what I want. I don’t know what to ask it to create when I’ve never seen a list of tasks that are available. Luckily, for now it is possible to toggle off the “New Designer” and switch back to the old that allows you to add each individual component as you go and select those components from a list which gives you a short description of what each does. Maybe in the future I’ll be more open to using Copilot with everything I develop, but right now, as a new developer in Azure, it doesn’t work for me.

Unintuitive service naming

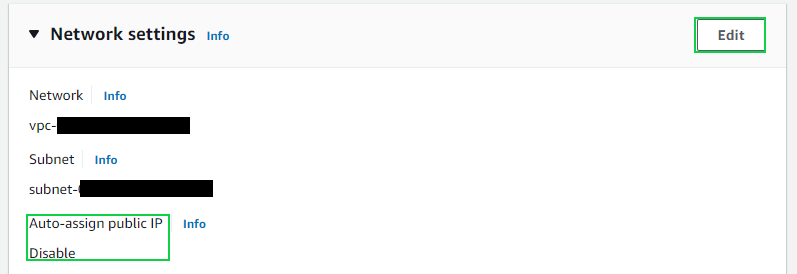

The only other nitpick I have about the Azure and Microsoft cloud ecosystem is that sometimes, the names they pick for their services don’t make sense, are confusing, or are the same thing as a totally different service. Microsoft doesn’t seem to be that great at naming things to make them understandable at a quick glance, but I suppose that can also be attributed to the desire of all cloud computing companies to make themselves look modern and cutting-edge.

The best example I can give of this phenomenon right now is that a data lake in Azure is built on what are called Storage Accounts, which is the blob storage service within Azure. It’s not as confusing to me now that I’ve been dealing with it for a month and a half, but that name doesn’t seem at all intuitive to me. Each time my colleagues directed me to go to the “data lake” I would get confused as to where I was supposed to navigate since the service I would click into was called Storage Accounts instead.

Summary

Although it felt like such a big switch in the beginning to move from an AWS shop to an Azure shop, I have already started to enjoy developing in Azure. It has so much to offer in terms of cloud ETL development and I can’t wait to keep learning and growing with these tools. I’ve already compiled so many things that I can’t wait to share, so I am hoping I will get those posts ready and posted soon so others can learn from my new Azure developer struggles.