When I started my blog a couple of months ago, I set myself a posting schedule of once a week on Tuesdays. If you are someone who notices details and patterns, you may have noticed that I went a few weeks without posting at the end of December and into January, but it wasn’t for lack of trying. In this post I’m going to talk about the issues that prevented me from posting and the workaround that has finally allowed me to start posting again.

Background

While trying to create and edit my tutorial post about working with Liquibase, I kept running into the same vague JSON error when trying to save drafts, always at the same point. The error was: “Updating failed. The response is not a valid JSON response”.

It always failed about midway through the post, either when I copied in text that contained double-quote characters or when I added the first image. I spent several weeks going back and forth with support at Flywheel (the web host I use), trying to figure out what was causing this JSON error when I tried to save a draft.

We tried many different things, including the normal troubleshooting steps of disabling plugins, reverting to the default WordPress theme, disabling all of my browser extensions, and working in an incognito window. I read many of the articles online that discuss how to resolve this error, and none of those solutions worked. On the Flywheel side, they told me they were trying several different server changes, including extending timeout values and other firewall-type changes. I even completely restored my website to default, but not even that worked, and afterward I had to rebuild everything on my website (except for posts and pages, which I had exported before restoring).

As of the time of writing this, I still don’t officially have a resolution from Flywheel for this error that keeps popping up and generating 403 errors on the backend (I figured that out myself by inspecting what happened when I clicked the “save draft” button). However, thanks to some kind folks on a WordPress forum, I finally figured out a viable workaround that I am actually enjoying using more than the online WordPress admin portal.
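If you want to check for the same thing on your own site, here is a rough Python sketch of the reasoning. It assumes the editor saves drafts through a request that is supposed to come back as JSON, so a 403 page returned in its place would show up as the vague “not a valid JSON response” message. The function name `diagnose_save_response` is made up for illustration and is not part of WordPress or Flywheel; you would paste in the status code and response body you see in your browser’s network inspector after clicking “save draft”.

```python
import json


def diagnose_save_response(status: int, body: str) -> str:
    """Classify a save-draft response copied from the browser's network tab.

    Hypothetical helper for illustration only.
    """
    # First check whether the body parses as JSON at all.
    try:
        json.loads(body)
        body_is_json = True
    except (TypeError, ValueError):
        body_is_json = False

    # A 403 means the request was refused before the save could succeed.
    if status == 403:
        return "blocked"
    # Any other response with a non-JSON body would still confuse the editor.
    if not body_is_json:
        return "not-json"
    return "ok"


# Made-up sample responses, not real output from my site.
print(diagnose_save_response(403, "<html><body>Forbidden</body></html>"))  # blocked
print(diagnose_save_response(200, '{"id": 42, "status": "draft"}'))        # ok
```

A “blocked” result points toward something on the server side refusing the request, which is a more useful thing to report to your host than the generic JSON message.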

The Successful Workaround

The workaround that I am now using, even as I write this, is the WordPress development tool called Local. This tool gives you a local development environment for WordPress that can seamlessly connect with Flywheel and other hosting providers. The setup was super easy and took me less than 5 minutes. After going through the setup, all I had to do was pull a copy of my site down to my machine, and since then I have been able to edit my website locally through the tool.

Once you’re done making all your changes, you only need to select “Push” to send them to your production site and then choose which changes you want pushed. It takes a few minutes for Flywheel to update with the changes, but then you can see your locally developed changes on your real site. All without the hassle of the ever-present JSON error when trying to save a draft.

I will be continuing to work with Flywheel to try to resolve the error on my production site, but at least for now, I am able to keep working and posting, back to my normal schedule.

Summary

If you are facing endless errors when trying to create posts for WordPress and you’re hosted with Flywheel, try using the Local tool to develop on your own local machine to see if that helps you in the same way it helped me.

UPDATE

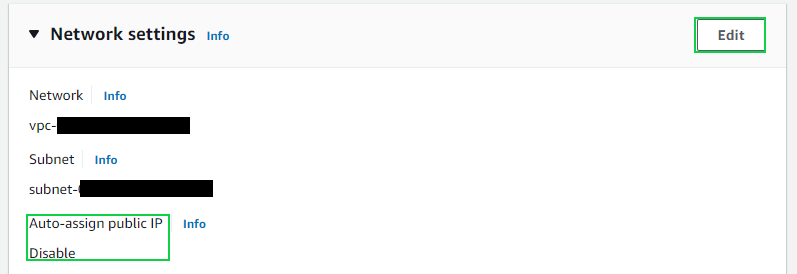

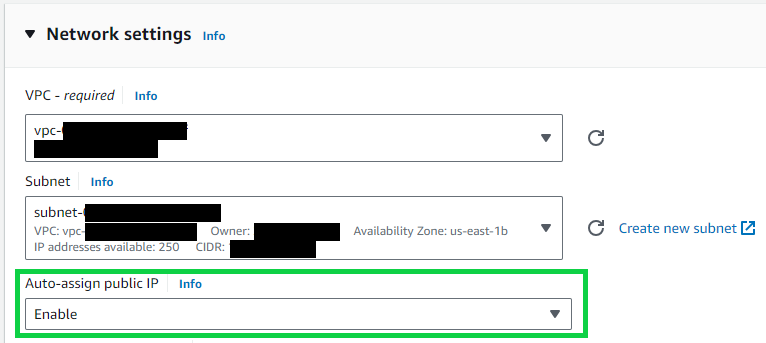

We finally seem to have resolved my issue after going back and forth so many times with the Flywheel support team and continuing to escalate it through all their support levels. I wasn’t provided any further details, so I’m not sure exactly what fixed it, but the last detailed message I received seemed to indicate that something was set up incorrectly with their Web Application Firewall (WAF) and that this was preventing me from editing. Before the issue was fixed, I also found that I couldn’t edit the footer of my site because I was getting 403 errors when trying to save those changes too. That problem, along with my post creation issue, has been resolved.

The moral of this update is that if you’re having this same issue and your site is hosted on Flywheel, keep pushing them to try more things on their end, and don’t let them say that it must be something with your local environment. They were super great about continuing to try as long as I kept pushing and saying it still wasn’t working. It also seemed to help when I started showing them the exact errors I was getting with the Inspect tool in Chrome, so they had something to work with.